Key takeaways

- A data quality score gives you a single, trustworthy number, from 0 to 100, to judge bank statement ingestion before it hits your books.

- For Indian finance teams, diverse statement formats and GST and TDS complexities make quality scoring essential, not optional.

- Measure across four dimensions (completeness, accuracy, timeliness, and consistency), then weight the dimension scores into a composite quality score.

- Use atomic row and column checks, map exceptions to business risk, and enforce hard gates: zero critical exceptions allowed.

- Monitor trends by bank and entity, and respond fast to template shifts signaled by falling scores. See the Supervisory Data Quality Index narrative for context.

- Automate wherever practical, pairing domain rules with tools like AI Accountant to scale checks and remediation.

- Good governance with clear roles, audit trails, and monthly reviews converts data quality into a repeatable, defensible process.

What is a Data Quality Score and Why Indian Finance Teams Need It

A data quality score is a single indicator of trust in your extracted bank data, like a credit score for transactions. The typical range is 0 to 100, and anything below 95 should raise flags before you post to ledgers. In India, with multiple statement formats, GST and TDS entries, and hybrid workflows between Tally and cloud tools, the need is acute. RBI continues to push banks to raise their Supervisory Data Quality Index, yet the quality that finally reaches your accounting system still depends on your ingestion pipeline.

Without a quality score, errors surface at reconciliation, not ingestion, which means late discoveries, rework, and stressed client relationships.

With a score, you move from reactive cleanups to proactive prevention. That shift protects GST compliance, avoids vendor mismatches, and sustains credible dashboards.

Treat it as a non negotiable gate between raw statements and your books.

Core Dimensions of Bank Data Quality Measurement

Completeness: The Foundation of Trust

Completeness means full coverage: all expected rows captured, no duplicates, no missing days, mandatory fields present, and the opening balance matching the prior closing balance. A practical metric is (rows captured minus duplicates) divided by rows expected, multiplied by 100 and capped at 100. When HDFC changes a layout mid year, your completeness score drops, and that drop is your early warning.

- No missing dates in the period

- Mandatory columns in every row: date, amount, balance, narration

- Opening balance equals prior day closing balance
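The completeness metric described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation; the function name `completeness_score` and its parameter names are assumptions introduced here.

```python
def completeness_score(rows_captured: int, duplicates: int, rows_expected: int) -> float:
    """(rows captured - duplicates) / rows expected * 100, capped at 100.

    All names here are hypothetical; the formula follows the text above.
    """
    if rows_expected <= 0:
        raise ValueError("rows_expected must be positive")
    unique_rows = max(rows_captured - duplicates, 0)
    # Multiply before dividing to keep the arithmetic exact for whole numbers.
    return min(unique_rows * 100 / rows_expected, 100.0)


# 98 rows parsed, 2 of them duplicates, 100 rows expected for the period.
print(completeness_score(98, 2, 100))  # 96.0
```

For a partial statement, `rows_expected` would be the count for the period actually provided rather than the full month.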

Accuracy: Getting the Details Right

Accuracy validates the content itself, not just its presence. Focus on critical fields, because a misread amount is more damaging than a fuzzy narration. Typical issues include weekend posting anomalies, FX notation confusion, and misclassified TDS entries. For method, start from the Data Quality Framework and the practical patterns in data quality in banking. Compute the score as correctly validated critical fields divided by total critical fields, multiplied by 100.
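As a rough sketch of the accuracy calculation, the snippet below counts how many critical fields pass a validator. The choice of date and amount as critical fields, the `DD/MM/YYYY` date format, and every function name are assumptions made for illustration; real statements will need bank specific validators.

```python
from datetime import datetime
from decimal import Decimal, InvalidOperation


def is_valid_date(value) -> bool:
    """Assumes day-first DD/MM/YYYY dates; other layouts need their own format."""
    try:
        datetime.strptime(value, "%d/%m/%Y")
        return True
    except (TypeError, ValueError):
        return False


def is_valid_amount(value) -> bool:
    """Accepts numbers with thousands separators, e.g. 1,20,000.50."""
    try:
        return Decimal(str(value).replace(",", "")).is_finite()
    except InvalidOperation:
        return False


def accuracy_score(rows, validators) -> float:
    """Correctly validated critical fields / total critical fields * 100."""
    total = passed = 0
    for row in rows:
        for field, is_valid in validators.items():
            total += 1
            passed += bool(is_valid(row.get(field)))
    return passed * 100 / total if total else 0.0


validators = {"date": is_valid_date, "amount": is_valid_amount}
rows = [
    {"date": "01/04/2024", "amount": "1,250.00"},
    {"date": "31/02/2024", "amount": "500"},  # 31 February does not exist
]
print(accuracy_score(rows, validators))  # 75.0
```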

Building Your Quality Scoring Framework

Row-Level and Column-Level Checks

Atomic checks roll up into your overall score. Each check is pass or fail, and failed checks become exceptions with risk tags.

- Duplicate detection using amount, date, and narration matching

- Invalid dates or negative balances

- Micro amount floods that signal testing transactions

- Round amount anomalies that indicate manual entries

- Weekend posting patterns specific to your bank

- Correct tagging of foreign exchange charges, refunds, and fees

- TDS and interest recognition patterns

- Each column has the right data type

- Headers detected correctly

- Mandatory fields present in every row

- Identifiers like UPI and IMPS markers are normalized

For a structured blueprint, revisit the Data Quality Framework.
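One way to structure atomic checks is as small pass or fail functions that each carry a risk tag. The sketch below is illustrative only: the `Check` class, the two sample checks, and the severity tags attached to them are assumptions, and a real rule library would cover the full list above.

```python
from dataclasses import dataclass
from typing import Callable

MANDATORY_FIELDS = ("date", "amount", "balance", "narration")


@dataclass
class Check:
    name: str
    severity: str  # illustrative risk tag, not a prescribed mapping
    passes: Callable[[dict], bool]


def has_mandatory_fields(row: dict) -> bool:
    return all(row.get(f) not in (None, "") for f in MANDATORY_FIELDS)


def balance_not_negative(row: dict) -> bool:
    balance = row.get("balance")
    # Non-numeric balances are left to the mandatory-field and type checks.
    return not isinstance(balance, (int, float)) or balance >= 0


CHECKS = [
    Check("mandatory_fields_present", "high", has_mandatory_fields),
    Check("balance_not_negative", "high", balance_not_negative),
]


def run_checks(rows, checks=CHECKS):
    """Return one exception record per failed (row, check) pair."""
    exceptions = []
    for index, row in enumerate(rows):
        for check in checks:
            if not check.passes(row):
                exceptions.append(
                    {"row": index, "check": check.name, "severity": check.severity}
                )
    return exceptions


sample_rows = [
    {"date": "01/04/2024", "amount": 100.0, "balance": 500.0, "narration": "UPI/ACME"},
    {"date": "02/04/2024", "amount": 50.0, "balance": -10.0, "narration": ""},
]
for exc in run_checks(sample_rows):
    print(exc)
```

Keeping each check atomic makes it straightforward to add bank specific rules later without touching the scoring logic.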

Exception Severity and Business Risk Mapping

Not all errors are equal. Map exceptions to severity with explicit gates.

- Critical: balance discontinuities, missing transactions

- High: date errors, amount mismatches

- Medium: narration loss, vendor identification issues

- Low: minor formatting issues, token drops

Set hard rules: zero critical exceptions allowed, and at most three high severity exceptions. Weight critical issues heavily in your composite score. For reference implementation ideas, see the Data Quality Framework.
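The hard rules above (zero critical, at most three high severity) can be expressed as a simple gate over the exception list. This is a minimal sketch; the exception record shape and the names `MAX_ALLOWED` and `passes_hard_gates` are assumptions.

```python
from collections import Counter

# Limits taken from the text: zero critical, at most three high severity.
MAX_ALLOWED = {"critical": 0, "high": 3}


def passes_hard_gates(exceptions) -> bool:
    """True when no severity exceeds its allowed count.

    Each exception is assumed to be a dict with a "severity" key.
    Medium and low severities are not gated here.
    """
    counts = Counter(e["severity"] for e in exceptions)
    return all(counts[severity] <= limit for severity, limit in MAX_ALLOWED.items())


exceptions = [{"severity": "high"}] * 3 + [{"severity": "low"}] * 10
print(passes_hard_gates(exceptions))                               # True
print(passes_hard_gates(exceptions + [{"severity": "critical"}]))  # False
```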

Implementation Guide for Indian Banks

Handling India-Specific Edge Cases

India has unique statement quirks that global tools miss.

- Merged account PDFs mixing personal and business flows

- Password protected statements requiring manual steps; track how often they occur

- Partial statements; adjust the completeness denominator to the period provided

- GST and TDS entries vary by bank; SBI narrations differ from ICICI

- Cheque returns and reversals create negative entries that are valid

- Weekend and holiday postings bunch on Mondays; tune duplicate rules accordingly

Document bank specific rules and review them quarterly as templates evolve.

Setting Up Monitoring and Trend Analysis

Scores tell you today, trends tell you whether tomorrow will be worse. Track month on month metrics per bank and entity, set alerts for drops below thresholds, and account for seasonality. For regulatory context, see the RBI circular on data quality index and this Economic Times report.

Practical Scoring and Governance Framework

Weight Distribution and Scoring Logic

Use a balanced model that reflects business impact.

| Dimension | Weight | Why this weight |
| --- | --- | --- |
| Completeness | 40% | Missing data disrupts reconciliation and filings |
| Accuracy | 40% | Wrong numbers contaminate books and GST |
| Timeliness | 10% | Freshness drives dashboards and cash decisions |
| Consistency and Validity | 10% | Format conformance enables automation |

The composite score is completeness times 0.4 plus accuracy times 0.4 plus timeliness times 0.1 plus consistency times 0.1. Apply these acceptance gates: a score of at least 95 with zero critical exceptions is posted, a score of at least 90 but below 95 goes to quarantine, and a score below 90 is rejected and remediated. For sector updates, see BFSI Economic Times coverage.
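The weighting and gates can be sketched as follows. The weights and thresholds come from the text; the function names are hypothetical, and holding a batch that scores 95 or more but still has a critical exception in quarantine (rather than rejecting it) is an assumption, since the text does not cover that case.

```python
WEIGHTS = {
    "completeness": 0.4,
    "accuracy": 0.4,
    "timeliness": 0.1,
    "consistency": 0.1,
}


def composite_score(scores: dict) -> float:
    """Weighted sum of the four dimension scores, each on a 0 to 100 scale."""
    return sum(scores[dimension] * weight for dimension, weight in WEIGHTS.items())


def acceptance_decision(score: float, critical_exceptions: int) -> str:
    """Map a composite score to post, quarantine, or reject."""
    if score < 90:
        return "reject"
    if score < 95 or critical_exceptions > 0:
        # Assumption: a high score with critical exceptions is held for review.
        return "quarantine"
    return "post"


scores = {"completeness": 98, "accuracy": 96, "timeliness": 90, "consistency": 100}
total = composite_score(scores)  # 39.2 + 38.4 + 9 + 10, roughly 96.6
print(round(total, 1), acceptance_decision(total, critical_exceptions=0))
```

Rounding is applied only for display; the gate compares the unrounded score, so a value such as 94.8 falls into quarantine rather than slipping between bands.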

Governance and Audit Trail Requirements

Define clear roles: the Preparer runs extraction, the Reviewer validates and approves exceptions, and the Approver signs off posting.

- Retain raw files with timestamps

- Keep parsed outputs with extraction confidences

- Maintain exception logs and remediation notes

- Store an immutable change log

Adopt a monthly review cadence, for example the first Monday of each month, and update rules based on findings. For reference, see the Data Quality Framework and the IDRBT Data Quality Framework.

Step-by-Step Remediation Workflow

Intake Triage Process

Verify statement completeness, page count, and OCR legibility. Confirm the requested date range, check for gaps, and prefer netbanking downloads over forwarded copies. For scanned PDFs, improve image clarity first, which often yields a 20 to 30 percent lift in extraction quality.

Data Repair and Validation Steps

Step 1, Template Selection

Choose the correct bank and account type template, because HDFC savings and HDFC current layouts may differ.

Step 2, Header and Footer Cleanup

Strip headers, footers, and promos that confuse parsers.

Step 3, Balance Reconciliation

Fix balance breaks by adding missing rows or correcting math.

Step 4, Deduplication

Apply fuzzy matches on amount, date, and narration to catch near duplicates.

Step 5, Date Normalization

Standardize DD/MM/YY formats and disambiguate day and month.

Step 6, Token Recovery

Extract UPI IDs, cheque numbers, IFSC from narrations and preserve them.

After each fix, recompute exceptions and iterate until thresholds are met. For background reading, see the Data Quality Framework and data quality in banking.
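Steps 3 and 5 above lend themselves to small helper functions. The sketch below locates balance breaks and normalizes day-first dates to ISO format. It assumes signed amounts (credits positive, debits negative), `Decimal` values, and a short list of date layouts; the function names and the list of formats are illustrative, not exhaustive.

```python
from datetime import datetime
from decimal import Decimal

# Day-first layouts only, on the assumption that Indian statements are DD/MM.
DATE_FORMATS = ("%d/%m/%Y", "%d/%m/%y", "%d-%m-%Y", "%d-%b-%Y")


def normalize_date(text: str) -> str:
    """Return an ISO date string (YYYY-MM-DD) or raise ValueError."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {text!r}")


def find_balance_breaks(opening_balance: Decimal, rows) -> list:
    """Indexes of rows where previous balance + amount != stated balance."""
    breaks = []
    running = opening_balance
    for index, row in enumerate(rows):
        if running + row["amount"] != row["balance"]:
            breaks.append(index)
        # Resync on the stated balance so one break is not reported repeatedly.
        running = row["balance"]
    return breaks


rows = [
    {"amount": Decimal("-200"), "balance": Decimal("800")},
    {"amount": Decimal("500"), "balance": Decimal("1350")},  # 50 unaccounted for
    {"amount": Decimal("-50"), "balance": Decimal("1300")},
]
print(find_balance_breaks(Decimal("1000"), rows))  # [1]
print(normalize_date("05/04/24"))                  # 2024-04-05
```

A break at a given index usually points to a missing row just before it or a misread amount on it, which is where manual repair should start.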

Root Cause Analysis and Prevention

Document the root cause, whether it is a new bank format, an OCR failure, or a manual error. Update rule engines, automate recurring fixes, and maintain a searchable knowledge base of issues and resolutions. Share learnings across teams to accelerate response the next time a bank changes a template.

Tools and Technology for Quality Management

Automated Quality Checking Tools

Manual checks do not scale, so automation is essential.

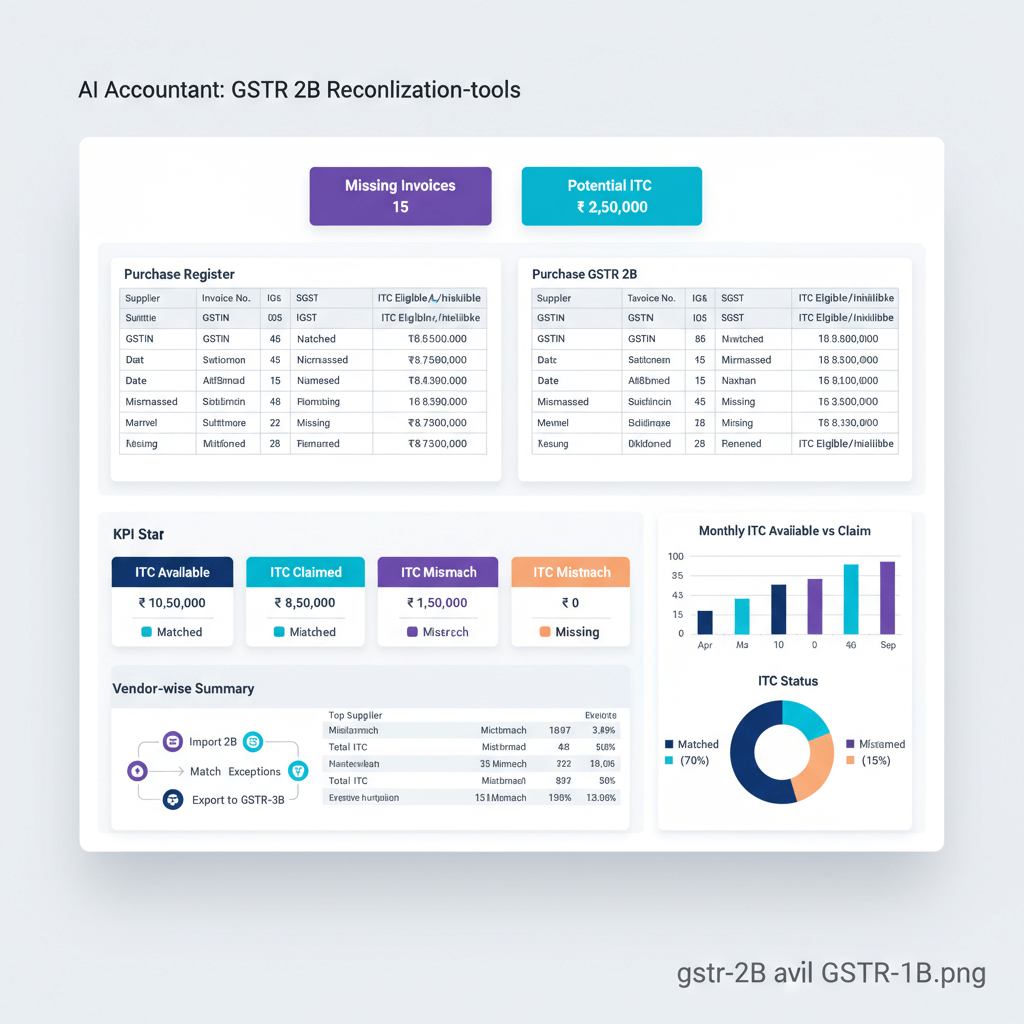

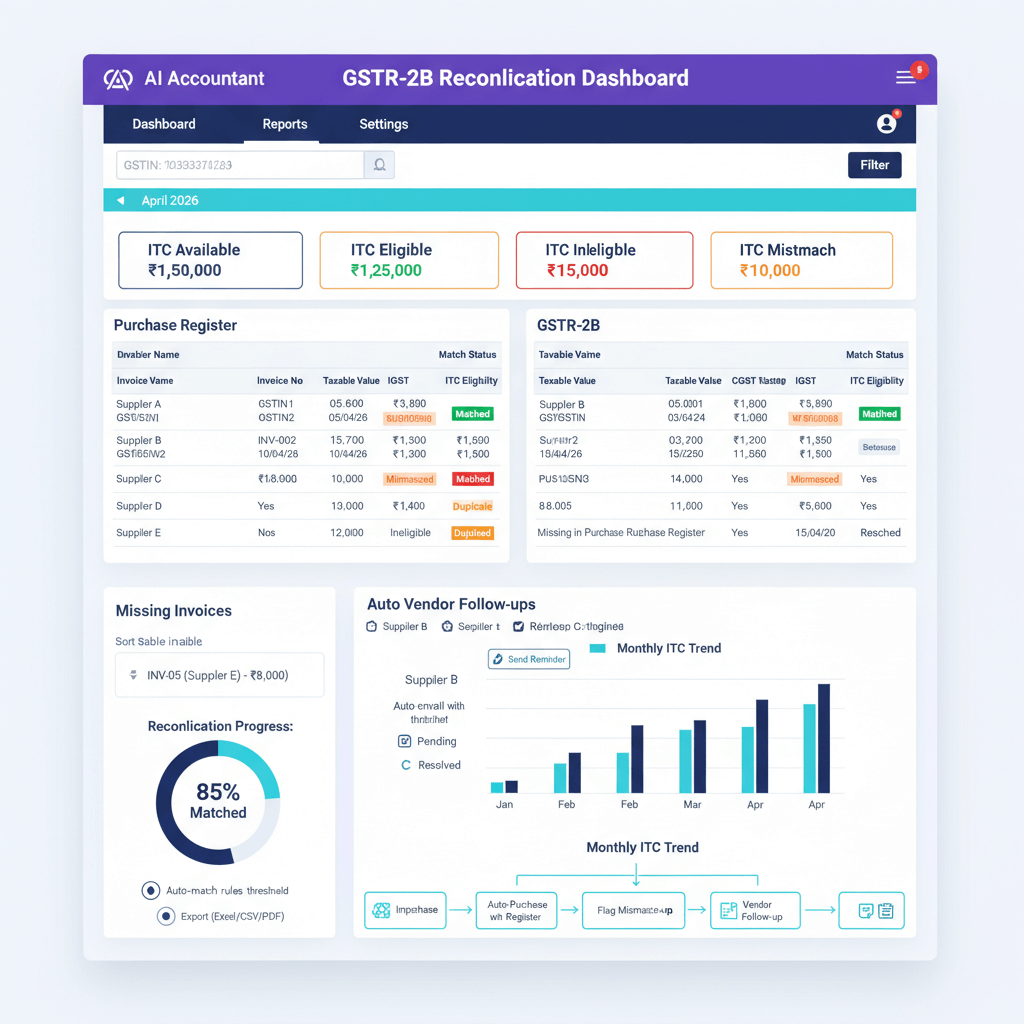

- AI Accountant, specialized for Indian formats with built in quality scoring, automated exception detection, and Tally or Zoho integrations

- QuickBooks, bank feed validation with limited Indian bank coverage

- Xero, effective quality checks but struggles with Indian PDFs

- FreshBooks, basic validation, fit for simple workflows

- Zoho Books, decent Indian bank support with some quality checks

For OCR and parsing, choose engines tuned to Indian date formats, GST or TDS markers, and noisy scans. You can complement commercial tools with targeted Python rules or Excel macros, and catch the bulk of common issues quickly.

Integration with Accounting Systems

Gate postings with quality. Use staging tables, allow only transactions above thresholds to sync to Tally or Zoho, and route medium quality data to a review queue. Build dashboards so CFOs can see scores by bank and entity, and log every approval or rejection to satisfy auditors.

Best Practices and Common Pitfalls

What Top CA Firms Do Differently

- Maintain bank specific rule libraries

- Track quality per client and per bank

- Train teams to remediate quickly

- Communicate issues early to clients

- Price services by data quality tiers

Leaders treat data quality as a service promise, not a background task.

Mistakes to Avoid

- Setting perfection thresholds that stall operations; 95 percent is practical

- Ignoring slow declines in score trends

- Skipping documentation of exceptions and decisions

- Applying uniform rules to all banks despite their differences

- Automating prematurely; learn with manual checks first, then codify

Real-World Case Studies

Large Manufacturing Company

A Pune manufacturer with 50 plus accounts across 8 banks cut reconciliation time from 15 days to 3 by enforcing completeness of at least 98 percent, accuracy of at least 96 percent, and zero critical exceptions. GST errors dropped 85 percent, and vendor disputes fell 60 percent. The key shift was catching issues at ingestion, not at reconciliation.

CA Firm Managing 100+ Clients

A Mumbai CA firm scaled using a tiered framework (Tier 1 at 98 percent, Tier 2 at 95 percent, Tier 3 at 90 percent), automated checks with AI Accountant and scripts, and embedded quality scores in onboarding. The result was 40 percent fewer rework hours, 30 percent more clients with the same team, and client satisfaction up from 7.2 to 8.8.

Growing Fintech Startup

A Bangalore fintech added real time score monitoring, automatic alerts on drops, and daily reports. They detected a critical bank format change in two hours, avoided misreporting investor metrics, and reduced finance overtime by 50 percent.

Future of Bank Data Quality in India

Upcoming Regulatory Changes

RBI is pushing toward higher quality benchmarks, as reflected in the Supervisory Data Quality Index progression. Account Aggregator pipes will reduce PDF parsing issues, yet bring API reliability challenges. Expect tighter GSTN alignment and, in time, mandated quality reporting for listed entities.

Technology Trends to Watch

- AI anomaly detection that flags pattern shifts and template changes

- Blockchain backed audit trails for immutable scoring records

- Real time quality scoring, not batch reviews

- Predictive quality analytics, forecast trouble before it appears

- Industry benchmarks that allow peer comparisons

Building Your Implementation Roadmap

Month 1: Foundation

- Start with one bank and entity, pick the most problematic case

- Define dimensions and weights, keep it simple

- Implement completeness and accuracy checks first

- Run scores in parallel without blocking postings

- Document every issue and fix, build your playbook

Month 2: Expansion

- Add two banks, apply learnings

- Automate high frequency checks

- Set provisional thresholds, tighten over time

- Train the full team on reading and acting on scores

- Publish a quality dashboard for stakeholders

Month 3: Optimization

- Cover all critical banks and entities, targeting 80 percent of volume

- Operationalize remediation SOPs

- Enable trend monitoring and alerts

- Integrate with accounting systems and block poor quality from posting

- Refine weights based on observed business impact

Ongoing: Continuous Improvement

- Monthly quality reviews, first Monday

- Quarterly rule updates as formats change

- Annual reassessment of the scoring model

- Share learnings across teams and peers

- Invest in ML, predictive analytics, and real time scoring as you mature

Conclusion

Building a data quality score for bank ingestion helps Indian finance teams catch issues before they cascade. Start small, define simple metrics, and build momentum. Remember, done today beats perfect next quarter. As regulations evolve and bank formats shift, a disciplined score, plus trends and governance, will turn month end from firefighting into a smooth, predictable close. For broader context on sector wide momentum, revisit the evolving Supervisory Data Quality Index.

FAQ

How should a CA define critical versus high exceptions in a bank ingestion quality score?

Define critical exceptions as those that can change reported balances or create compliance risks, for example, balance discontinuities or missing transactions. High exceptions affect correctness but may be resolvable with limited risk, for example, date errors or amount mismatches. Set a hard rule of zero critical exceptions allowed. Tools like AI Accountant let you codify this with exception categories and acceptance gates.

What is an acceptable accuracy threshold for Indian bank statements in practice?

For most ledgers, 95 to 97 percent accuracy on critical fields is practical. Below 95, rework costs spike. Segment by field importance, amounts and dates carry the most weight, narration is secondary. An AI driven checker such as AI Accountant can weight fields and compute a composite accuracy score automatically.

Can I compute completeness when the client sends a partial date range?

Yes, adjust the denominator to the provided period. Verify that the first opening balance aligns with the prior period closing, and that no dates are missing within the partial range. If you rely on OCR, add a confidence floor to avoid counting unreadable rows, which systems like AI Accountant expose per row.

How do I prevent duplicate postings across multiple bank statement files?

Use a composite key of amount, date, and normalized narration tokens, with a sliding window of one to three days on the date to account for weekend posting. Fuzzy match narrations after removing boilerplate strings. This matches the duplicate detection check listed under Row-Level and Column-Level Checks, which many CA firms adopt inside their ingestion pipelines.
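A possible shape for this duplicate rule, using only the Python standard library, is sketched below. The boilerplate phrases, the 0.9 similarity cutoff, and the function names are assumptions chosen for illustration; tune them per bank.

```python
import re
from datetime import date
from difflib import SequenceMatcher

# Hypothetical boilerplate phrases to strip before comparing narrations.
BOILERPLATE = ("TRANSFER TO", "TRANSFER FROM", "PAYMENT")


def normalize_narration(text: str) -> str:
    text = text.upper()
    for phrase in BOILERPLATE:
        text = text.replace(phrase, " ")
    # Keep alphanumeric tokens (plus @ and . for UPI handles), drop separators.
    return " ".join(re.findall(r"[A-Z0-9@.]+", text))


def is_probable_duplicate(a: dict, b: dict, window_days: int = 3,
                          min_similarity: float = 0.9) -> bool:
    """Same amount, dates within the window, and near-identical narrations."""
    if a["amount"] != b["amount"]:
        return False
    if abs((a["date"] - b["date"]).days) > window_days:
        return False
    similarity = SequenceMatcher(
        None, normalize_narration(a["narration"]), normalize_narration(b["narration"])
    ).ratio()
    return similarity >= min_similarity


friday = {"date": date(2024, 4, 5), "amount": 1500,
          "narration": "UPI/412345678901/ACME TRADERS"}
monday = {"date": date(2024, 4, 8), "amount": 1500,
          "narration": "upi 412345678901 acme traders"}
print(is_probable_duplicate(friday, monday))  # True
```

Flagged pairs are candidates for review rather than automatic deletion, since genuine repeat payments of the same amount to the same vendor can fall inside the window.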

What data checks are specific to Indian GST and TDS in bank statements?

Check narrations for GST markers (SGST, CGST, and IGST tokens) and TDS recognition patterns. Ensure mapping to the correct ledgers and tax heads. Normalize UPI or IMPS tokens, as noted in the column-level checks on identifiers like UPI and IMPS markers. AI Accountant includes India specific token libraries to standardize these.
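A simple starting point for these checks is token matching on the narration. The patterns below are illustrative assumptions; narration conventions differ by bank, so a production rule set would need bank specific variants.

```python
import re

# Whole-word tax markers; \b stops GST from matching inside CGST.
TAX_TOKENS = re.compile(r"\b(CGST|SGST|IGST|GST|TDS)\b", re.IGNORECASE)
CHANNEL_TOKENS = re.compile(r"\b(UPI|IMPS)\b", re.IGNORECASE)


def tag_narration(narration: str) -> dict:
    """Return the tax markers found and a normalized payment channel, if any."""
    tax_tokens = sorted({token.upper() for token in TAX_TOKENS.findall(narration)})
    channel = CHANNEL_TOKENS.search(narration)
    return {
        "tax_tokens": tax_tokens,
        "channel": channel.group(1).upper() if channel else None,
    }


print(tag_narration("IMPS/P2A/123/CGST @9% on charges"))
print(tag_narration("upi-acme@okhdfc-tds refund"))
```

The tags can then drive ledger mapping, with untagged rows that a reviewer later classifies as tax entries feeding back into the pattern list.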

How do I evidence to auditors that bad data never reached the books?

Maintain a gate with quality thresholds, keep staging tables, and store exception reports with timestamps. Preserve raw files, parsed outputs, and immutable change logs. An audit friendly trail is easier if your tool, for example AI Accountant, records every decision, who approved, and the score at approval.

What is the recommended scoring formula for a composite data quality score?

Start with completeness times 0.4 plus accuracy times 0.4 plus timeliness times 0.1 plus consistency times 0.1. Enforce gates: at least 95 to proceed, at least 90 but below 95 to quarantine, and below 90 to reject. Adjust weights after three months of trend data, based on which issues created real business impact.

How should I monitor quality trends across banks and clients?

Track month on month scores at three levels, per bank, per entity, and portfolio wide. Alert on sudden drops, for example five points in a week, which often signals a template change. Build a simple dashboard and a daily digest. Many firms use AI Accountant to push alerts into Slack or email when scores fall below 95.
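The alerting rule can be prototyped before any dashboard exists. The sketch below flags a drop of five or more points between consecutive readings, or any reading below 95; the function name, the input shape, and the alert wording are assumptions.

```python
def find_score_alerts(history, max_drop: float = 5.0, floor: float = 95.0):
    """history: list of (label, score) tuples ordered oldest first.

    The first reading serves only as a baseline and is not itself checked.
    """
    alerts = []
    for (prev_label, prev_score), (label, score) in zip(history, history[1:]):
        drop = prev_score - score
        if drop >= max_drop:
            alerts.append((label, f"dropped {drop:.1f} points since {prev_label}"))
        elif score < floor:
            alerts.append((label, f"below floor of {floor:g}"))
    return alerts


# Hypothetical weekly scores for one bank and entity.
weekly = [("W1", 98.0), ("W2", 97.5), ("W3", 91.0), ("W4", 94.0)]
for label, reason in find_score_alerts(weekly):
    print(label, reason)
```

Running the same function per bank, per entity, and on the portfolio average gives the three monitoring levels described above.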

What remediation workflow minimizes rework when scores fail?

Follow a triage, repair, and validate loop. First, confirm file integrity and date range, then apply repairs: header cleanup, deduplication, balance reconciliation, and date normalization. Recompute exceptions after each step. Close with a root cause note and a rule update to prevent recurrence. This loop is built into tools like AI Accountant.

How do I quantify the ROI of implementing a quality score in my practice?

Measure rework hours before and after, reconciliation cycle time, GST return adjustments, and client escalations. Many CA firms report 30 to 50 percent fewer rework hours and faster closes within two to three months. Include soft benefits such as auditor confidence, fewer vendor disputes, and a more predictable month end.

Is OCR reliable enough for scanned Indian bank statements, or should I insist on CSVs?

Insist on CSVs where possible, but you can reach strong reliability with tuned OCR that understands Indian formats. Improve scan quality first, then parse with a banking aware engine tuned to Indian date formats, GST or TDS markers, and noisy scans. Always pair OCR with quality scoring and exception gates to catch residual errors.

How do I handle Monday bunching and weekend postings without false duplicate flags?

Use a matching window across Friday to Monday, normalize timestamps to dates, and add narration token signatures so Monday bulk postings do not collide. Weight amount plus token matches higher than date alone. Configure the duplicate rule in your AI tool, for example AI Accountant, to allow weekend windows.

Can I use the quality score to drive client pricing tiers?

Yes, many CA firms do. Publish thresholds per tier, for example 98 percent for premium, 95 percent for standard, 90 percent for basic. Clients with frequent exceptions that require remediation consume more hours, and the pricing should reflect this objectively. A transparent scorecard from AI Accountant helps align expectations.
